Server virtualization has long promised unprecedented economic savings for IT, and, to be fair, there are some areas where it has saved IT some money. However, the alluring promise of “huge” savings has never been realized. Why is that?

Virtualization’s promise has always revolved around better utilization of computing resources:

- Lowers hardware cost

- Improves operational expense in deploying / updating servers or instances

- Reduces floor space requirements while cutting power and cooling requirements

For the last decade, the general discussion around IT budgets has been that 70% is spent on just keeping the lights on, leaving a very small amount for innovation. Virtualization, and the theoretical cost savings associated with it, was supposed to help with innovation. In reality, server virtualization did not move the needle between “just keeping the lights on” and “innovation”.

The reality is that while server virtualization did “fix” or help with some of the server/budget issues in IT, it also “broke” other things within IT, such as:

- Application service levels (causing a slowdown in virtual machine deployments)

- Storage performance

- Networking performance and capabilities

(All stuff that can be, and in some cases has been, fixed.) Because things broke, dollars were not freed up for innovation; they went to fixing the stuff that broke.

However, this brings up an interesting question. And as with EVERY question in IT, it yields my favorite IT answer: “it depends.” The question is, what is the “desired state” of IT? Again, “it depends”, but as I dive deeper into the answer, it is interesting to note that “it depends” has many answers. The answer lives somewhere in the space between assured application performance and utilizing the environment as efficiently as possible. This isn’t one state; it could be many states, depending upon the applications and the resources available to support the environment.

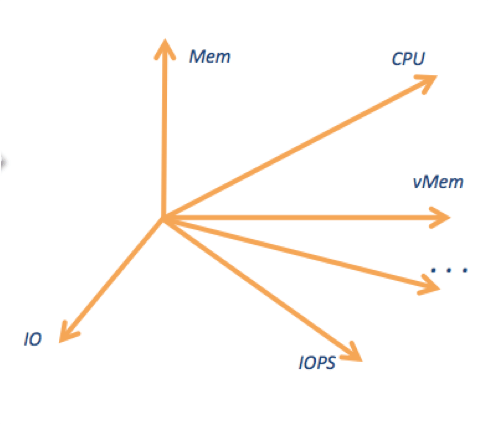

The easiest way to understand the concept of IT’s “desired state” is by taking a look at a free market model. In a free market model, resources are bought and sold, and prices are determined by unrestricted competition between independent entities. In a virtual server world, the free market is made up of the applications. Application performance is what IT is trying to ensure for the users. Applications that are more critical receive a higher SLA or, in market terms, are given more money to spend in the free market. The resources to be bought or sold are things like:

- Memory, vMem

- CPU, vCPU

- Ready Q’s, Ballooning

- Networking

- Swapping

- IOPS

- Storage Latency

This is a multi-dimensional market, and as you can imagine, every application or virtual machine needs to buy resources. As demand goes up for a particular resource, so does its cost. For every virtual machine, identifying the right resources to assign is a very complicated task. Even a single virtual machine may have as many as 15 different resources to manage. With one virtual machine, chances are you don’t have to do a lot of work or make a lot of decisions to ensure an SLA for that virtual machine or application. Now imagine you have 20 virtual machines, and then add the complexity of a multi-hypervisor environment.
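To make the market analogy concrete, here is a minimal sketch of demand-driven pricing. Everything in it is an assumption made for illustration: the `Host` class, the `resource_price` curve (price grows as utilization approaches capacity), and the idea of a per-VM `budget` standing in for its SLA. It is not how any particular hypervisor or product prices resources.

```python
from __future__ import annotations

from dataclasses import dataclass


def resource_price(used: float, capacity: float, base_price: float = 1.0) -> float:
    """Hypothetical price curve: the busier a resource, the more it costs."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    # Cap utilization just below 100% so the price stays finite.
    utilization = min(used / capacity, 0.99)
    return base_price / (1.0 - utilization) ** 2


@dataclass
class Host:
    name: str
    capacity: dict[str, float]  # e.g. {"cpu": 100, "mem": 100}
    used: dict[str, float]      # current consumption per resource


def quote(host: Host, demand: dict[str, float]) -> float:
    """Total price a VM would pay on this host for everything it demands."""
    return sum(
        amount * resource_price(host.used[resource], host.capacity[resource])
        for resource, amount in demand.items()
    )


def cheapest_host(hosts: list[Host], demand: dict[str, float], budget: float) -> Host | None:
    """Pick the lowest-priced host the VM can afford, or None if none fits."""
    best = min(hosts, key=lambda h: quote(h, demand))
    return best if quote(best, demand) <= budget else None


hosts = [
    Host("busy", {"cpu": 100, "mem": 100}, {"cpu": 80, "mem": 50}),
    Host("idle", {"cpu": 100, "mem": 100}, {"cpu": 20, "mem": 20}),
]
demand = {"cpu": 2, "mem": 4}

choice = cheapest_host(hosts, demand, budget=20.0)
print(choice.name if choice else "no affordable host")
```

A more critical application would simply be given a larger `budget`, so it can still buy what it needs when contention drives prices up.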

As an aside, a colleague once asked at a trade show, “How many people here are running VMware?” All the hands went up. Then he asked, “How many of you run VMware exclusively?” Only about 5% of the hands stayed up. IT is adding complexity to its environment every day.

As you can see, you can be dealing with thousands or even hundreds of thousands of different resource utilization possibilities. This is a truly impossible task. However, IT still seems to want to have knobs to tweak and thresholds to set in order to try to control the environment. IT likes to look at reports stating that particular virtual machines or applications are starved for resources and then try to determine how to “fix” the problem. The challenge is that by the time IT has looked at all the charts and reports and made a decision on what to do, the environment has changed, and different applications or virtual machines are having different challenges.
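One rough way to see where numbers of that size come from is to count. The sketch below is only a back-of-the-envelope illustration: it takes the figure of 15 resources per virtual machine from above, and it assumes (my assumption, not a claim about any real environment) that any two resource metrics in the environment can interact. The 50-VM row is likewise just an example size.

```python
from math import comb


def metric_count(vms: int, resources_per_vm: int = 15) -> int:
    """Number of individual resource metrics to watch."""
    return vms * resources_per_vm


def pairwise_interactions(vms: int, resources_per_vm: int = 15) -> int:
    """Number of distinct pairs of metrics that could affect each other."""
    return comb(metric_count(vms, resources_per_vm), 2)


for vms in (1, 20, 50):
    print(vms, metric_count(vms), pairwise_interactions(vms))
# 1 15 105
# 20 300 44850
# 50 750 280875
```

Under these assumptions, 20 virtual machines already produce tens of thousands of possible interactions, and 50 push it into the hundreds of thousands, before a second hypervisor is even considered.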

So, back to the question, “What is the desired state of IT?” This is a balance of performance and cost. As long as all of the applications are meeting their SLAs, the next question to ask is: are they doing it at an optimal cost?

This can be very difficult to quantify. For example, all of your applications may be meeting their SLAs, but have you built an overprovisioned environment? Do you have too many servers? Have you purchased too much memory? Do you have too many storage spindles? Better yet, have you invested in Flash/SSD to solve storage performance for virtual machines? Have you purchased storage tiering software to try to tell you what data (or application) is “hot” and should be on SSD? (The challenge here is that the tiering software has no visibility into the server, so the data may be active, but the resource constraints are really at the host, due to memory issues for example.) These tools and technologies are not cheap. Solid State Drives (SSD) and tiering software are very expensive and can end up being a big waste of money, especially if they don’t solve the problem.
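The two-part question (are SLAs met, and at what cost?) can be sketched as a simple check. This is a hypothetical illustration: the function name, the input dictionaries, and the 40% `low_water` threshold are all assumptions chosen for the example, not recommended values.

```python
def classify_environment(
    apps_meeting_sla: dict[str, bool],
    peak_utilization: dict[str, float],
    low_water: float = 0.40,  # assumed cutoff below which a resource looks overbought
) -> str:
    """Label an environment by performance first, then by cost."""
    # Performance comes first: any missed SLA means the state is not acceptable.
    if not all(apps_meeting_sla.values()):
        return "sla_at_risk"
    # SLAs are met; now ask whether that was achieved by buying too much.
    idle = sorted(r for r, peak in peak_utilization.items() if peak < low_water)
    if idle:
        return "overprovisioned: " + ", ".join(idle)
    return "desired_state"


print(classify_environment(
    {"erp": True, "mail": True},
    {"cpu": 0.72, "memory": 0.25, "storage_iops": 0.30},
))
# overprovisioned: memory, storage_iops
```

An environment can pass every SLA check and still land in the overprovisioned bucket, which is exactly the case that is easy to miss when only performance reports are reviewed.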

So, how can you ensure your applications are in the optimal operating zone while at the same time delivering on all the promises of server virtualization?

The real answer lies in the proper tools and automation: tools that look all the way from the application to the spindles in the storage to ensure applications are running in the “optimal operating zone” or “OOZ”. Placing these applications in their optimal operating zone through automation removes a large burden from IT.

By providing the ability to place applications and virtual machines where they are utilizing the environment as efficiently as possible, you are doing three things:

- Assuring true Quality of Service to all of your applications

- Saving a tremendous amount of money by not overprovisioning server and storage resources

- Reducing overall IT complexity and management

Doing these three things saves money in multiple ways and frees up IT time to plan for data center innovation. That innovation can reduce costs and help develop a more flexible and agile infrastructure, one that lets test and development groups bring new applications online faster, making the business more competitive and driving shareholder value.

So the Desired State of IT – well, it depends…