As a storage evangelist, I spend a lot of time educating sales teams as well as customers on the merits of our capabilities and technologies. Ever since IBM acquired TMS (Texas Memory Systems), I have been hearing from our sales reps that it can be difficult to compete because others in the industry offer capabilities in their flash solutions, such as data deduplication, that TMS does not.

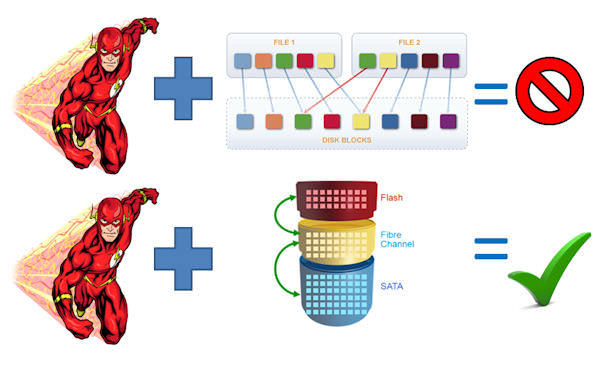

I spend quite a bit of time explaining to folks that deduplication on primary storage is really a waste of storage resources. Deduplication is so effective for backup because of the sheer number of daily, weekly, monthly and yearly backups users keep; most of that data repeats from one backup to the next, so deduplicating it saves valuable disk space. Primary storage, on the other hand, doesn’t hold much repetitive data, so deduplication there is not very useful. In addition, the resources it takes to perform deduplication can have an impact on performance. Take this to the next level: with technologies such as tiering, you are only putting “hot” data on flash / SSD storage, and typically only one copy of that data. Not every copy of a data set is “hot”, so why would you need data deduplication? If you are using flash / SSD specifically for performance, why add the extra overhead of deduplication when dedupe has a negligible effect? Smart tiering lets you use less flash, which saves users more money than data deduplication would.

I wouldn’t be overly concerned that TMS doesn’t have deduplication built in. One of the capabilities TMS can take advantage of is IBM’s Real-time Compression (RtC). At a 50% compression ratio, where data occupies half its original space, users can cut their $/GB in half. Compression can save more space on primary storage than deduplication, and RtC can do this in real time without any impact on performance. Users who take advantage of storage efficiency services like Real-time Compression can, at times, gain performance while cutting the overall cost of storage.
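To make the $/GB arithmetic concrete, here is a minimal Python sketch. The function name, the `space_savings` parameter, and the $10/GB list price are my own illustrative assumptions, not IBM figures; the only number taken from the text is the 50% compression ratio.

```python
def effective_cost_per_gb(raw_cost_per_gb: float, space_savings: float) -> float:
    """Cost per GB of user data after compression.

    space_savings is the fraction of capacity compression frees up
    (0.5 means the data occupies half its original space).
    """
    if not 0.0 <= space_savings < 1.0:
        raise ValueError("space_savings must be in the range [0, 1)")
    # Each raw GB now holds 1 / (1 - space_savings) GB of user data,
    # so the price per stored GB shrinks by the same factor.
    return raw_cost_per_gb * (1.0 - space_savings)


# Hypothetical list price of $10 per raw GB, 50% compression.
print(effective_cost_per_gb(10.0, 0.5))  # 5.0
```

The same function shows why the ratio matters so much on expensive media: every extra point of space savings comes straight off the price of each stored gigabyte.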

This brings up some news that hit the wire the other day. It seems that NetApp is now in the “all flash array” business, or at least they will be next year. What is interesting about the ComputerWorld article is that it quotes NetApp as saying, “Next year, NetApp plans to release an purpose-built all-flash array known as the FlashRay, which will have its own unique architecture. NetApp has yet to decide on the operating system for the FlashRay…”

I see two big issues here. First, if the array does not run the OnTap software, NetApp will need to do a lot of work to ensure that the storage services offered in the all flash array (which are the really valuable components) are consistent with the current OnTap software. If they are not, managing all of the data can become tricky. Second, and perhaps most important, the real value of flash / SSD lies in tiering. Flash is still very expensive. At IBM we have proven that with good tiering technology, you only need about 3% of your overall storage to be flash to increase your performance by as much as 300%. At this point in time NetApp doesn’t have any tiering software, and this capability is difficult to develop when you want to get it right; see this post on EasyTier vs. FAST.

Most customers don’t need an “all flash array” (unless of course that array ends up being only 3% of your storage, but that is for big customers). By combining intelligent tiering technology with a small amount of flash, users can get the performance they require and spend less. At the end of the day, budgets are still tight, so the ability to get the most out of your storage, spending only what is really necessary, is true efficiency.
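The cost side of the tiering argument is also simple arithmetic, sketched below in Python. The function name and the prices ($10/GB for flash, $1/GB for disk) are hypothetical placeholders chosen only to illustrate the calculation; the 3% flash fraction is the figure quoted above.

```python
def blended_cost_per_gb(flash_fraction: float,
                        flash_cost_per_gb: float,
                        disk_cost_per_gb: float) -> float:
    """Average $/GB of a tiered pool where flash_fraction of capacity is flash."""
    if not 0.0 <= flash_fraction <= 1.0:
        raise ValueError("flash_fraction must be in the range [0, 1]")
    # Weighted average of the two tiers by their share of total capacity.
    return (flash_fraction * flash_cost_per_gb
            + (1.0 - flash_fraction) * disk_cost_per_gb)


# Hypothetical prices: flash at $10/GB, disk at $1/GB.
tiered = blended_cost_per_gb(0.03, 10.0, 1.0)
all_flash = blended_cost_per_gb(1.0, 10.0, 1.0)
print(f"tiered: ${tiered:.2f}/GB, all flash: ${all_flash:.2f}/GB")
```

With these placeholder prices, the tiered pool costs a small fraction of the all flash configuration per gigabyte. Plug in your own prices; the gap narrows only as flash and disk prices converge.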