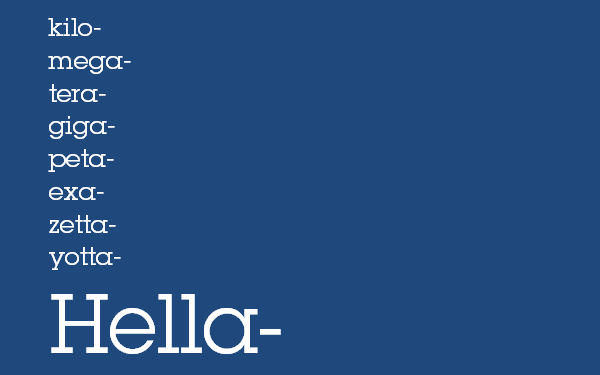

What started out as a joke among a bunch of Californians has turned into “The Official Petition to Establish Hella as the SI Prefix for 10^27”. Today we have the Kilobyte, Megabyte, Gigabyte, Terabyte, Petabyte, Exabyte, Zettabyte and Yottabyte. Most would think that this would be enough to last us for a while, but apparently it isn’t.

In 2009 we had accumulated about 1 ZB of data. Just one year later, we were at almost 2 ZB. In the span of a single year, we had accumulated as much data as in all the time before it. By 2020 we are expected to have accumulated 35 ZB of data. The time it takes us to add 1 ZB of data is shrinking dramatically, which shows that data is not only growing, it is growing at an exponential rate.
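To put a rough number on that growth, here is a back-of-the-envelope sketch in Python. It assumes a constant yearly growth rate between the 2 ZB (2010) and 35 ZB (2020) figures quoted above, which is a simplification of mine and not something the underlying forecast states. The function name is made up for illustration.

```python
# Figures quoted in the post, in zettabytes.
ZB_2010 = 2.0
ZB_2020 = 35.0
YEARS = 2020 - 2010


def implied_annual_growth(start, end, years):
    """Constant yearly growth factor that takes `start` to `end` in `years` years.

    Assumption: growth compounds at the same rate every year.
    """
    return (end / start) ** (1.0 / years)


factor = implied_annual_growth(ZB_2010, ZB_2020, YEARS)
print(f"Implied growth: about {(factor - 1) * 100:.0f}% per year")

# Walk forward year by year to see how much new data each year adds.
total = ZB_2010
for year in range(2011, 2021):
    added = total * (factor - 1)
    total += added
    print(f"{year}: added {added:.1f} ZB, total {total:.1f} ZB")
```

Under that assumption, the amount added per year keeps climbing, which is the “time to grow 1 ZB is shrinking” effect described above.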

Let’s take a look at a company like Facebook. Today they have tens of Petabytes of capacity and grow by 500 Terabytes per day. This means that by 2019 they will have over an Exabyte of data. At some point they will grow so much that they will need a Hellabyte of capacity.
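The arithmetic behind that estimate can be sketched as follows. The 500 TB per day comes from the figures above; the 30 PB starting point is a placeholder I picked for “tens of Petabytes”, and the sketch assumes the daily growth stays flat and uses decimal units (1 PB = 1,000 TB, 1 EB = 1,000 PB).

```python
TB_PER_PB = 1_000
TB_PER_EB = 1_000_000


def days_to_reach(target_tb, start_tb, growth_tb_per_day):
    """Days needed to grow from start_tb to target_tb at a flat daily rate."""
    return (target_tb - start_tb) / growth_tb_per_day


start_tb = 30 * TB_PER_PB      # assumption: stand-in for "tens of Petabytes"
growth_tb_per_day = 500        # figure quoted in the post

days = days_to_reach(TB_PER_EB, start_tb, growth_tb_per_day)
print(f"{days:.0f} days, or about {days / 365:.1f} years, to reach 1 EB")
```

With these inputs the answer comes out to a little over five years. If the daily growth rate itself keeps rising, the Exabyte mark arrives sooner.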

This conversation brings up another one I tend to have about once a week that is very relevant to this topic. Every week I am asked, “What is a ‘Storage Efficiency Evangelist’?” I tell most people that I am pretty passionate about storage, but I really don’t care about “disk”. The true value of disk today is in the software that surrounds it and allows it to hold a great deal more data. I call these storage services. At the end of the day, we can’t make disks physically bigger, we can’t spin them any faster, and we can’t add more heads to read more data off them, so at some point we will hit the point of diminishing returns, where a 3.5-inch or 2.5-inch disk drive can only store some maximum amount of capacity.

So how do you overcome this? Adding more disks to your overall storage infrastructure isn’t the best answer. At some point companies simply run out of room or can’t afford to keep adding capacity. To overcome this, users need storage efficiency services that allow them to “get more out of what is on the floor”, so to speak. For example, compression that shrinks data by 50% doubles your usable capacity. This does a few things. It allows users to cut their overall $/GB in half. Additionally, it allows users to store more data in a much smaller footprint. This cuts down on real estate, power and cooling, and management, all of which add up.
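Here is a minimal sketch of the compression math, assuming “50% compression” means the stored data shrinks to half its original size. The 100 TB array and the $0.10/GB price are invented for illustration only, and the function names are mine.

```python
def effective_capacity(raw_tb, savings):
    """Logical data that fits on raw_tb of disk when a fraction `savings`
    (0 <= savings < 1) of every byte written is removed by compression."""
    if not 0 <= savings < 1:
        raise ValueError("savings must be in the range [0, 1)")
    return raw_tb / (1 - savings)


def effective_cost_per_gb(cost_per_gb, savings):
    """Cost per GB of logical data after the same space savings."""
    return cost_per_gb * (1 - savings)


raw_tb = 100          # hypothetical array size
cost_per_gb = 0.10    # hypothetical purchase price in $/GB

for savings in (0.35, 0.50, 0.80):
    capacity = effective_capacity(raw_tb, savings)
    cost = effective_cost_per_gb(cost_per_gb, savings)
    print(f"{savings:.0%} savings: {capacity:.0f} TB usable at ${cost:.3f}/GB")
```

At 50% savings the 100 TB array holds 200 TB of data and the effective $/GB is cut in half. Real savings depend heavily on the data, so treat the percentages as inputs to test, not promises.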

My goal is to evangelize the storage efficiency services that users should be taking advantage of, services that allow them to store more data, the stuff that actually adds value to their business, without having to spend more on physical disk. Companies don’t make any money buying more disks. Companies make money when they can analyze more data to make more competitive business decisions. This is why storage efficiency features are a requirement today. In the blog post “Big Data is OUT, Real-Time Data is IN” I talked about keeping as much data online as possible so users can access it and perform real-time analytics on it to gain more insights. If this is the new requirement, then being as efficient as possible with your storage is critical to keeping your costs low and driving up your competitive advantage.

Capabilities companies should be taking advantage of in their primary storage are:

- Virtualization

- Thin Provisioning

- Compression

- Tiering

There are other storage efficiency capabilities available, such as data deduplication, of which I am a big fan; however, this technology is much more appropriate for backup storage. Leveraging each of these capabilities allows users to get back some percentage of their existing capacity, from 35% to as much as 80%, depending on the service or services used and the characteristics of the data. I highly recommend that customers look at implementing one or more of these capabilities in order to start maximizing the disk they have in the data center. At some point, you may be able to store a Hellabyte of data in Terabytes of physical space, so I guess we will need the standard of measurement after all. I wonder what would come after “Hella”: “NeedExtraSpacea”?