“Storage Efficiency” has become a big topic over the past 12 months, and several technologies introduced in the last few years are helping organizations cope with storage growth. We all know that data drives today's business decisions: the more data you have, hopefully, the better the decisions you can make to lead your business to success. The question is, “What is the value (and hence the cost) of the infrastructure that creates that success?” What we do know is that the ability to fit more data into a highly efficient footprint can give your company a competitive edge. Five technologies can help an IT organization build an efficient storage infrastructure. These are:

- Tiering

- Virtualization

- Thin Provisioning

- Compression

- Deduplication

It is also important to distinguish between two kinds of storage efficiency technology: efficiency technologies and optimization technologies. Defining these terms is useful because it helps us pick the right solution for what we are trying to accomplish. For the purpose of this post, efficiency means making better use of the capacity you already have, and optimization means getting more usable capacity out of the physical capacity you already have.

Using these definitions, technologies such as Tiering, Virtualization and Thin Provisioning are efficiency technologies: they help you make better use of the capacity you already have.

Tiering is typically applied to about 10% of your data or less: the data that requires higher performance, which it moves to flash storage. Good tiering technology analyzes data access patterns and moves the most active data to the highest-performing media. It doesn’t change the amount of physical capacity required; it only changes which type of capacity is required, and lets IT make sure data is served as quickly and efficiently as possible.
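To make the “analyze access patterns, promote the hottest data” idea concrete, here is a minimal Python sketch. It assumes data is tracked as named extents, that the access log is simply a list of extent names, and that flash can hold a fixed fraction of extents (10%, echoing the figure above). `plan_tiering` and every name in it are hypothetical, and real tiering engines usually weigh access history over time windows rather than raw counts.

```python
from collections import Counter
from typing import Dict, Iterable, List


def plan_tiering(access_log: Iterable[str], extents: List[str],
                 flash_fraction: float = 0.10) -> Dict[str, str]:
    """Hypothetical planner: place the most-accessed extents on flash.

    At most `flash_fraction` of all extents are promoted; the rest stay on disk.
    """
    counts = Counter(access_log)  # access count per extent (0 if never seen)
    # Rank extents hottest-first; ties are broken by name so the plan is deterministic.
    ranked = sorted(extents, key=lambda e: (-counts[e], e))
    flash_slots = int(len(extents) * flash_fraction)
    return {extent: ("flash" if rank < flash_slots else "disk")
            for rank, extent in enumerate(ranked)}


# Usage: 20 extents, two of which are clearly hot.
extents = [f"ext{i}" for i in range(20)]
log = ["ext3"] * 50 + ["ext7"] * 30 + ["ext1"] * 5
plan = plan_tiering(log, extents)
print(sorted(e for e, tier in plan.items() if tier == "flash"))  # ['ext3', 'ext7']
```

Note that the plan only changes where each extent lives; the number of extents, and therefore the physical capacity consumed, stays the same.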

Virtualization technology helps IT use disk capacity as efficiently as possible. Until recently, storage utilization rates were around 50%. By grouping storage into shared pools, IT avoids purchasing capacity needlessly. Virtualization can be used on 50% to 60% of your storage, but it doesn’t change your physical capacity requirements; at most, it lets users take advantage of the 20% to 40% of their capacity that previously went unused.
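The following Python sketch illustrates why pooling avoids needless purchases. It assumes three hypothetical arrays with 30 TB free each and a new 50 TB volume request: without virtualization the volume must fit on a single array, so the request fails and would trigger a purchase, while a virtualized pool can spread the volume across arrays. The function names and the simple fill-in-order placement are illustrative assumptions, not how any particular product lays out data.

```python
from typing import Dict, Optional


def allocate_siloed(free_tb: Dict[str, int], request_tb: int) -> Optional[str]:
    """Without virtualization, a volume must fit entirely on a single array."""
    for array in sorted(free_tb):
        if free_tb[array] >= request_tb:
            free_tb[array] -= request_tb
            return array
    return None  # no single array fits: IT would have to buy capacity


def allocate_pooled(free_tb: Dict[str, int],
                    request_tb: int) -> Optional[Dict[str, int]]:
    """With virtualization, arrays form one pool and a volume may span them."""
    if sum(free_tb.values()) < request_tb:
        return None  # the pool as a whole is genuinely full
    layout: Dict[str, int] = {}
    remaining = request_tb
    for array in sorted(free_tb):
        take = min(free_tb[array], remaining)
        if take > 0:
            layout[array] = take
            free_tb[array] -= take
            remaining -= take
    return layout


arrays = {"array_a": 30, "array_b": 30, "array_c": 30}  # TB free per array
siloed = allocate_siloed(dict(arrays), 50)
pooled = allocate_pooled(dict(arrays), 50)
print(siloed)  # None
print(pooled)  # {'array_a': 30, 'array_b': 20}
```

The same 90 TB of free space exists in both cases; pooling simply makes it reachable, which is why virtualization raises utilization without adding physical capacity.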

Like virtualization, thin provisioning can be used on 50% to 60% of your capacity, and it gives IT back about 10% to 40% of its capacity. Thin provisioning helps IT manage existing capacity and utilization by making it much easier to make capacity available to users, allocating physical space only as data is actually written. Again, however, it doesn’t change the amount of physical storage infrastructure required.
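Thin provisioning promises logical capacity up front and consumes physical capacity only when data is actually written. The minimal Python sketch below models that with a shared block pool. `BlockPool`, `ThinVolume` and the 4 KiB block size are hypothetical choices for illustration; a real array would also track free-space thresholds and alert well before the pool runs out.

```python
from typing import Dict, List

BLOCK_SIZE = 4096  # bytes per block; an illustrative assumption


class BlockPool:
    """Shared physical capacity that thin volumes draw from on demand."""

    def __init__(self, physical_blocks: int) -> None:
        self.free: List[int] = list(range(physical_blocks))
        self.data: Dict[int, bytes] = {}

    def allocate(self) -> int:
        if not self.free:
            raise RuntimeError("pool exhausted: add physical capacity")
        return self.free.pop()

    @property
    def used_blocks(self) -> int:
        return len(self.data)


class ThinVolume:
    """Advertises its full logical size but allocates blocks on first write."""

    def __init__(self, logical_blocks: int, pool: BlockPool) -> None:
        self.logical_blocks = logical_blocks
        self.pool = pool
        self.mapping: Dict[int, int] = {}  # logical block -> physical block

    def write(self, lba: int, payload: bytes) -> None:
        if not 0 <= lba < self.logical_blocks:
            raise IndexError("write beyond the volume's logical size")
        if lba not in self.mapping:  # first write: only now consume space
            self.mapping[lba] = self.pool.allocate()
        self.pool.data[self.mapping[lba]] = payload

    def read(self, lba: int) -> bytes:
        if lba in self.mapping:
            return self.pool.data[self.mapping[lba]]
        return bytes(BLOCK_SIZE)  # never-written blocks read back as zeros


# Three 80-block volumes (240 logical blocks) share 100 physical blocks.
pool = BlockPool(100)
volumes = [ThinVolume(80, pool) for _ in range(3)]
for vol in volumes:
    for lba in range(10):
        vol.write(lba, b"x" * BLOCK_SIZE)
print(pool.used_blocks)  # 30
```

Users see 240 blocks of capacity, yet only the 30 blocks actually written are consumed; the physical pool itself is no larger than before.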

Optimization technologies help IT better manage its physical storage footprint by allowing users to put more data in the same physical space. The two optimization technologies in use today are data deduplication and real-time compression.

Optimization technologies are a bit tricky, because they require a balance between optimization on one side and performance and availability on the other. At the end of the day, IT chooses the storage it buys with two very important characteristics in mind: performance and availability. Optimization technologies must not compromise these characteristics. For this reason, data deduplication really isn’t ready for “prime time” on primary, active storage: it creates too much of a performance impact on active data. Today, data deduplication can be used on about 10% to 15% of the primary, less active capacity in the data center, and it provides only about 30% to 50% data reduction on that capacity. In other words, deduplication can reduce physical infrastructure requirements by as much as 10%, meaning IT may not need to buy quite as much physical capacity.
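Deduplication typically works by splitting data into chunks, fingerprinting each chunk with a cryptographic hash, and storing a chunk only the first time its fingerprint appears. The Python sketch below assumes fixed 4 KiB chunks and SHA-256 fingerprints, and `DedupStore` is a hypothetical name; production systems often use variable-size chunking and persistent on-disk indexes. It also hints at the performance concern above: every write pays for hashing and an index lookup before the data is stored.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 4096  # fixed-size chunking; an assumption for this sketch


class DedupStore:
    """Stores each unique chunk once; files are lists of chunk fingerprints.

    Unreferenced chunks are never reclaimed here (no garbage collection).
    """

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}     # fingerprint -> chunk
        self.files: Dict[str, List[str]] = {}  # file name -> fingerprints

    def write(self, name: str, data: bytes) -> None:
        recipe = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            fingerprint = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(fingerprint, chunk)  # store only if new
            recipe.append(fingerprint)
        self.files[name] = recipe

    def read(self, name: str) -> bytes:
        return b"".join(self.chunks[fp] for fp in self.files[name])

    @property
    def logical_bytes(self) -> int:
        return sum(len(self.chunks[fp])
                   for recipe in self.files.values() for fp in recipe)

    @property
    def physical_bytes(self) -> int:
        return sum(len(chunk) for chunk in self.chunks.values())


# Two nearly identical images share most of their chunks.
common = b"A" * CHUNK_SIZE + b"B" * CHUNK_SIZE
store = DedupStore()
store.write("vm1.img", common + b"C" * CHUNK_SIZE)
store.write("vm2.img", common + b"D" * CHUNK_SIZE)
print(store.logical_bytes, store.physical_bytes)  # 24576 16384
```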

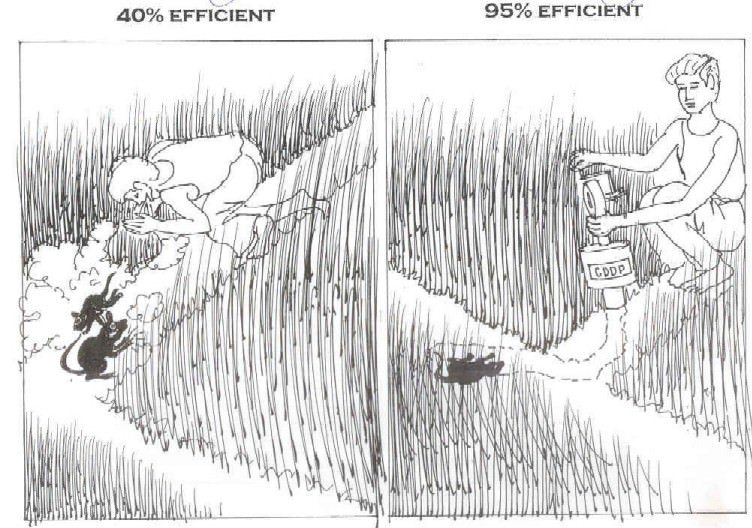

Real-time compression, on the other hand, has one of the most dramatic effects on primary storage capacity. It can be used on as much as 85% of the storage footprint and can reduce the size of that data by 50% to 80%. As a result, real-time compression could let IT purchase as much as 70% less overall storage capacity. It also does not compromise the main characteristics for which users buy storage (performance and availability), so IT could keep the same amount of data, or more, online in as much as 70% less footprint. Additionally, IT can purchase storage opportunistically without such a dramatic impact on infrastructure, processes or budgets. This lets companies keep more capacity online and available, so they can run analytics on more data and become more competitive.
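The overall savings figures in this post follow from simple arithmetic: the share of physical capacity saved is roughly the fraction of the footprint a technique can be applied to, multiplied by how much it shrinks that data. The Python sketch below (the function name is hypothetical) applies that formula to the upper-end figures quoted in the post. It assumes savings on eligible data translate one-for-one into capacity you no longer need to buy, and it ignores metadata and free-space overheads.

```python
def net_capacity_reduction(applicable_fraction: float,
                           data_reduction: float) -> float:
    """Share of total physical capacity saved when a technique that shrinks
    eligible data by `data_reduction` applies to `applicable_fraction` of it."""
    for value in (applicable_fraction, data_reduction):
        if not 0.0 <= value <= 1.0:
            raise ValueError("fractions must be between 0 and 1")
    return applicable_fraction * data_reduction


# Upper-end figures from the post.
compression = net_capacity_reduction(0.85, 0.80)
deduplication = net_capacity_reduction(0.15, 0.50)
print(f"real-time compression: {compression:.1%}")
print(f"deduplication:         {deduplication:.1%}")
```

Compression comes out at about 68%, in line with the “as much as 70%” figure, while deduplication comes out at about 7.5%, within the “as much as 10%” ceiling.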

When deciding which storage efficiency technology will have the greatest impact on your overall environment and budget, start with optimization technologies to get data growth under control. Using more data to add value to the lines of business that drive revenue will make you a hero and your business more successful.