Server virtualization has changed the IT landscape dramatically. It has become a magic potion curing a number of ills in the physical server world such as low individual CPU utilization and excess use of space, power and cooling in the data center. However, like all potions that cure what ails you, there can be side effects. You need to be careful of what the Witch Doctor orders.

When I speak with customers who have aggressively implemented a virtual server infrastructure, 9 out of 10 tell me that they underestimated the effect virtualization would have on their backups and backup process. When backup is not considered during the virtual server assessment and planning process, it can make virtualization less of the magic potion they had hoped for. So what is the issue? Backup is a virtualization bottleneck, and without addressing it, you may not be able to obtain the server consolidation ratios you had been expecting, which can have a negative effect on your virtual server TCO and ROI.

This is a timely discussion as VMworld has just concluded. VMware users flocked to VMworld looking for best practices when it comes to implementing virtual server technology. Because virtualization allows IT to reduce the overall physical hardware infrastructure, users will be looking at how to maximize their server consolidation ratios (get as many virtual servers on a physical server as they can and still provide good application performance).

I often hear that companies assess their environments by looking at the production applications in their physical server environment, identifying their workloads and translating that into some consolidation ratio of physical servers to virtual servers. I also hear, from these same customers, that backup was never taken into consideration during the assessment phase when trying to identify the best possible consolidation ratios. These customers implement their new virtual server environments, install the backup agent they had previously been using for physical server backups and attempt to back up their virtual servers, only to find that they can protect just 50% to 60% of the new environment. Why?

Let’s look at the physics. Let’s say your virtualization ratio is 12 virtual servers to 1 physical server. Twelve physical servers back up with 12 NICs, 12 CPUs, 12 memory ‘chunks’, etc. When you moved these 12 physical servers into the virtual world and put them on one physical server, did you put 12 NICs in the new physical server? Did you put 12 CPUs in the new server? Do you have 12x the memory? Chances are, probably not. However, the amount of data to back up didn’t change, did it? So how could one expect that backup, which is I/O, memory and CPU intensive, would perform well in a virtual world?

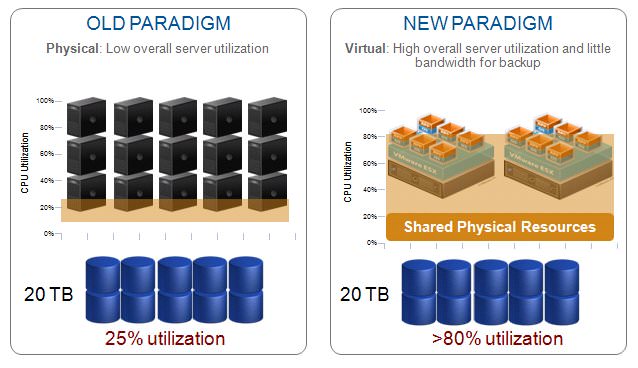

Diagram 1 shows that when you back up 12 servers, the resource drain on each server is roughly 25% (per system during a full backup). When you virtualize these 12 servers onto one or two physical servers, your physical system utilization shoots up to 80%+. This utilization can be so dramatic that it actually affects the number of virtual servers you can have on these systems, which can ruin your virtual server TCO / ROI.

Simple math dictates that unless your new physical server has the same resources as all your physical servers combined had before the consolidation, you won’t get the same backup performance. I have spoken with customers who aimed for a 25 to 1 virtual to physical server consolidation but were only able to reach 15 to 1 in reality, because their backup application couldn’t handle 25 virtual servers on one physical server without leaving some unprotected.
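To make that simple math concrete, here is a rough back-of-the-envelope sketch in Python. The function name and every number in it (host throughput, backup window, data per virtual server) are my own illustrative assumptions, not measurements from any customer environment, and it only models the shared I/O path, ignoring CPU and memory contention.

```python
def max_vms_protected(host_throughput_mb_s: float,
                      window_hours: float,
                      data_per_vm_gb: float) -> int:
    """Estimate how many full VM backups fit through one host's
    shared I/O path within the backup window (hypothetical model)."""
    window_seconds = window_hours * 3600
    total_mb_movable = host_throughput_mb_s * window_seconds
    per_vm_mb = data_per_vm_gb * 1024
    return int(total_mb_movable // per_vm_mb)


# Assumed figures for illustration only: about 100 MB/s of usable
# backup throughput on the host, an 8 hour window, 200 GB per VM.
target_ratio = 25
protected = max_vms_protected(100, 8, 200)
coverage = min(protected, target_ratio) / target_ratio
print(f"{protected} of {target_ratio} VMs protected ({coverage:.0%})")
```

With these assumed inputs the host runs out of backup window well before it reaches the planned ratio, which is the pattern customers describe. Plug in your own throughput, window and data sizes before drawing conclusions.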

People could argue that if you properly schedule each virtual machine to back up in a window when none of the other systems are backing up, then perhaps you could get by with traditional backup. The flip side is, IT has been telling me they don’t want to manage the backup process any more than they have to. So how do you ‘fix’ this problem?

The issue is that backup is a very I/O-intensive application, so there is only one way to fix the problem. You need to reduce the amount of I/O generated and sent through the physical devices that house the virtual servers during backup. Virtual servers were designed to provide a lot of benefits, but high I/O capability is not one of them. (This is okay; every technology implementation has its tradeoffs. When the positives outweigh the negatives, especially in a substantial way, as they do with virtual servers, you usually have a paradigm shift, and this is what we are seeing with virtual servers.)

So how do you change the I/O pattern of backup? You do so by decreasing the amount of data that uses the shared resources during backup. There are a couple of ways to do this. One way is to leverage the storage array and snapshot the data. Snapshots allow you to make copies of virtualized server data, mount the snapshot on a proxy host and off-load the backups from the physical server that houses the virtual servers. The downsides are:

- This becomes a new set of processes to manage, separate from your traditional backup processes

- You need extra storage capacity with this solution

- You will need to manage another physical server (proxy server)

- You will need more backup agents from your backup software provider

The most efficient way, however, is to take advantage of a new backup software application that leverages data reduction (data deduplication) on the client. Your processes stay the same and there is no need for additional primary storage hardware. By leveraging a ‘smarter’ backup client, you will reduce the I/O tax on your physical server devices and thereby have the ability to maximize your TCO / ROI for your new virtual server environment.
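For readers who want to see why client-side deduplication cuts I/O, here is a minimal sketch, assuming fixed-size chunks and SHA-256 fingerprints. It is not any vendor's actual implementation; `backup_with_dedup`, `CHUNK_SIZE` and the in-memory `known_hashes` index are hypothetical names, and real products typically use variable-size chunking and a server-side index.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


def backup_with_dedup(data: bytes, known_hashes: set,
                      chunk_size: int = CHUNK_SIZE) -> int:
    """Send only chunks the backup target has not seen before.

    Returns the number of bytes that actually had to be sent.
    known_hashes stands in for the backup server's chunk index.
    """
    bytes_sent = 0
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        fingerprint = hashlib.sha256(chunk).hexdigest()
        if fingerprint not in known_hashes:
            known_hashes.add(fingerprint)
            bytes_sent += len(chunk)  # only new chunks cross the wire
    return bytes_sent


# Two VMs built from the same template share most of their data.
os_image = b"".join(bytes([i]) * CHUNK_SIZE for i in range(4))
vm1 = os_image + b"A" * CHUNK_SIZE  # template plus VM-specific data
vm2 = os_image + b"B" * CHUNK_SIZE

index = set()
sent_vm1 = backup_with_dedup(vm1, index)
sent_vm2 = backup_with_dedup(vm2, index)
print(sent_vm1, sent_vm2)
```

In this toy example the first virtual server sends all five of its chunks, while the second sends only the one chunk that differs from the shared template. That is the kind of reduction that takes pressure off the shared NICs and disks on the host.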

Additionally, a number of these technologies have additional offerings that truly make them next generation. Backup licensing is slowly moving to a capacity-based license model. One great feature of these new products is that there is no charge for clients or agents. This allows you to create a virtual server template with the backup agent embedded within it. You no longer have to worry about proliferating backup clients and then paying for all those clients when it is time to ‘true up’ with your backup software vendor. Data deduplication technologies also offer the ability to replicate the backup data efficiently to disk at a remote site, so you can develop a more efficient disaster recovery plan that reduces the reliance on tape and increases your overall operational efficiency.

Regardless of which path you choose, each requires IT to rethink their backup strategies when it comes to protecting virtual server environments.

I encourage you to do two things as you consider moving to a virtual server infrastructure:

- Make sure you are thinking about data protection when architecting your new virtual server environment

- Check out some of the new technologies and best practices offered by vendors for protecting virtual servers.

Hopefully this will help put your virtual server world back on the Road to Recovery!