Rather than leave a lengthy comment on Tom Cook's Friday blog post, "Compression and Dedupe: Business Value and Data Safety" (from a marketing perspective, Fridays are bad days to post blogs, especially in the summer), I thought I would respond here. This may get lengthy, as Tom made a number of points I need to comment on.

The first thing I want to say is that in technical marketing, the proper strategy is to take an offensive approach rather than play defense. However, given the amount of FUD (fear, uncertainty, and doubt) in Tom's latest blog post, I have to defend compression to some degree.

Now, I think we can all agree that data compression and data deduplication are two technologies that can complement one another very well. Avamar (EMC) deduplicates data at the source and then compresses it before sending it to the Avamar Data Store, gaining tremendous efficiency in network utilization. ProtecTIER (IBM) deduplicates data at the target device and then compresses it before storing it. Other solutions also combine compression and data deduplication.
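Purely as an illustration of how the two techniques stack, here is a toy dedupe-then-compress pipeline in Python. It follows the order described above (deduplicate first, then compress what remains), but the fixed 4 KiB chunks, SHA-256 fingerprints, zlib, and the names `dedupe_then_compress` and `rebuild` are assumptions for this sketch, not the design of Avamar, ProtecTIER, or any other product.

```python
import hashlib
import zlib

CHUNK = 4096  # hypothetical fixed chunk size; real products use their own chunking schemes


def dedupe_then_compress(data: bytes, chunk: int = CHUNK):
    """Keep one zlib-compressed copy of each unique chunk plus an ordered recipe."""
    store, recipe = {}, []
    for i in range(0, len(data), chunk):
        piece = data[i:i + chunk]
        fp = hashlib.sha256(piece).hexdigest()  # content fingerprint
        if fp not in store:                     # deduplication: skip chunks already stored
            store[fp] = zlib.compress(piece)    # compression: applied to unique chunks only
        recipe.append(fp)
    return store, recipe


def rebuild(store: dict, recipe: list) -> bytes:
    """Decompress and reassemble the chunks in their original order."""
    return b"".join(zlib.decompress(store[fp]) for fp in recipe)


# Hypothetical backup stream: the same two 4 KiB chunks repeated 25 times.
data = (b"log line: backup ok\n" * 205)[:4096] + bytes(range(256)) * 16
data *= 25
store, recipe = dedupe_then_compress(data)
stored = sum(len(c) for c in store.values())
print(f"{len(data)} bytes in, {len(store)} unique chunks, {stored} bytes stored")
```

Deduplication collapses the 50 chunks in this stream to 2 unique ones, and compression then shrinks those two before they are stored.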

I'd like to comment on some key points Tom made in his piece, several of which are just blatantly wrong:

1) Compression identifies redundant data across a very small window, usually 64 KB. – While this may be true for other compression technologies, it is not true for Storwize. In Storwize compression, the input window is not fixed in size at all; it is the resulting write that is fixed in size. That write size is also specifically mapped to the I/O pattern of the data being written. The goal is for Storwize to do all the work it needs on a particular file or LUN in a single I/O, and that is why Storwize has no performance penalty.
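The post does not describe Storwize's algorithm in detail, so the following is only a hypothetical sketch of the general idea of fixing the size of the compressed output rather than the size of the input window. It uses Python's zlib (DEFLATE, whose LZ77 sliding window is at most 32 KB) and greedily grows each input chunk in `STEP`-byte increments while its compressed form still fits in `BLOCK_SIZE` bytes. `pack_fixed_output`, `BLOCK_SIZE`, and `STEP` are invented names and values.

```python
import os
import zlib

BLOCK_SIZE = 4096  # hypothetical fixed size of each compressed write
STEP = 1024        # hypothetical granularity for growing the input chunk


def pack_fixed_output(data: bytes, block_size: int = BLOCK_SIZE, step: int = STEP):
    """Split data into variable-size input chunks whose compressed form fits block_size.

    Returns (input_length, compressed_bytes) pairs. Each payload is at most
    block_size bytes; the input length varies with how compressible the data is.
    """
    blocks = []
    pos = 0
    while pos < len(data):
        take = min(step, len(data) - pos)
        best = zlib.compress(data[pos:pos + take])
        if len(best) > block_size:
            raise ValueError("step is too large for block_size")
        # Grow the input chunk while the compressed result still fits in one block.
        while pos + take < len(data):
            candidate_len = min(take + step, len(data) - pos)
            candidate = zlib.compress(data[pos:pos + candidate_len])
            if len(candidate) > block_size:
                break
            take, best = candidate_len, candidate
        blocks.append((take, best))
        pos += take
    return blocks


text = b"LUN 7 block write, status=OK\n" * 8000  # highly compressible sample
noise = os.urandom(64 * 1024)                    # nearly incompressible sample
for label, data in (("text", text), ("random", noise)):
    blocks = pack_fixed_output(data)
    avg_in = sum(n for n, _ in blocks) / len(blocks)
    print(f"{label}: {len(blocks)} blocks, ~{avg_in:.0f} input bytes per block")
```

Highly compressible input packs far more source bytes into each fixed-size block than random input does. A real system would also pad each payload to exactly the block size and avoid recompressing the chunk from scratch on every step; both are omitted here for brevity.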

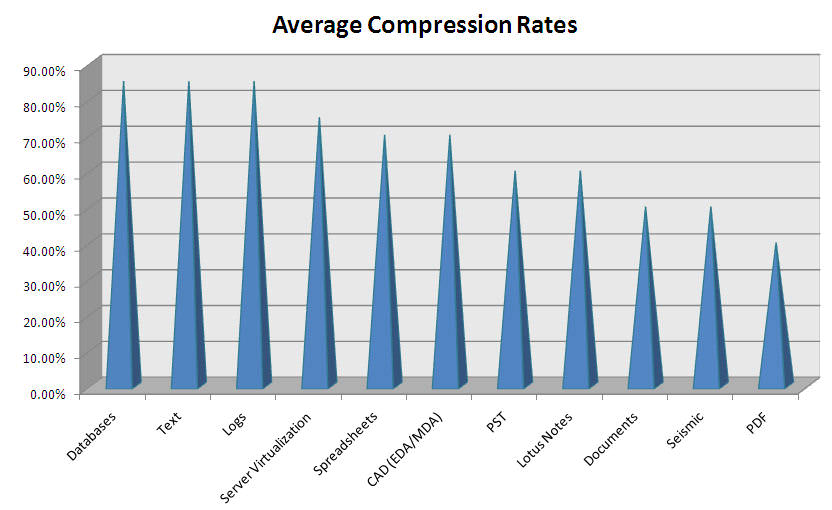

2) Compression produces data reduction rates of at most 2X for most data types. – It seems Tom needs a lesson in the most common answer in IT: "IT DEPENDS." Data compression ratios are 100% tied to the data type. For a true indication of data compression ratios, see Figure 1.

Figure 1
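A quick way to see that the ratio depends on the data type is to run the same compressor over different inputs. This sketch uses Python's zlib (DEFLATE, an LZ77-family method) as a stand-in, not Storwize's compressor; the sample inputs are made up, with random bytes standing in for already-compressed media such as JPEG or video.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Original size divided by zlib-compressed size (higher means better reduction)."""
    return len(data) / len(zlib.compress(data, level))


samples = {
    "repetitive text": b"INSERT INTO orders VALUES (1, 'pending');\n" * 2000,
    "random bytes": os.urandom(64 * 1024),
}
for name, data in samples.items():
    print(f"{name}: {compression_ratio(data):.2f}x")
```

The same algorithm at the same setting yields a very large ratio on the repetitive text and roughly 1x (no reduction) on the random bytes.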

3) Compression alters the underlying data structures and requires compression and decompression of data. – If you look up LZ compression on Wikipedia, you find the following: "The Lempel-Ziv (LZ) compression methods are among the most popular algorithms for lossless storage." Deduplication, by contrast, throws away repeated pieces of data and replaces them with references to a single stored copy that other files share. If any shared piece cannot be reconstructed for any reason, multiple data sets are now corrupt. Additionally, deduplicated data must be rehydrated before ANY application can work with it, because today's applications must work with data in its native format.

4) Compression operates in the data path and impacts read/write performance as a 'bump in the wire' (kudos to Storwize for their work to improve performance). – So we actually do thank Tom for his kind (and accurate) words here.

5) Compression is a potential single point of failure for data retrieval. – How would that be any different for data deduplication?
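To illustrate the lossless-compression and single-point-of-failure points above, here is a toy Python example. The zlib round trip shows an LZ-family compressor returning every original byte. The deduplicated store is a hypothetical model (fixed 4 KiB chunks keyed by SHA-256; the names `dedupe`, `unrecoverable`, `a.doc`, and `b.doc` are invented) in which two files share one stored chunk, so losing that single copy leaves both files unrecoverable.

```python
import hashlib
import zlib


def fingerprint(chunk: bytes) -> str:
    """Content address for a chunk (SHA-256 hex digest)."""
    return hashlib.sha256(chunk).hexdigest()


def dedupe(files: dict, chunk_size: int = 4096):
    """Store each unique fixed-size chunk once; keep a per-file list of fingerprints."""
    store, recipes = {}, {}
    for name, data in files.items():
        recipes[name] = []
        for i in range(0, len(data), chunk_size):
            chunk = data[i:i + chunk_size]
            store.setdefault(fingerprint(chunk), chunk)
            recipes[name].append(fingerprint(chunk))
    return store, recipes


def unrecoverable(store: dict, recipes: dict) -> list:
    """Names of files that reference at least one chunk missing from the store."""
    return sorted(name for name, fps in recipes.items() if any(fp not in store for fp in fps))


# LZ-family compression (zlib/DEFLATE) is lossless: every byte comes back.
original = b"quarterly report, draft 3\n" * 500
assert zlib.decompress(zlib.compress(original)) == original

# Two hypothetical files that share their first 4 KiB chunk.
shared = b"S" * 4096
files = {"a.doc": shared + b"A" * 4096, "b.doc": shared + b"B" * 4096}
store, recipes = dedupe(files)
del store[fingerprint(shared)]        # lose the single stored copy of the shared chunk
print(unrecoverable(store, recipes))  # ['a.doc', 'b.doc']
```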

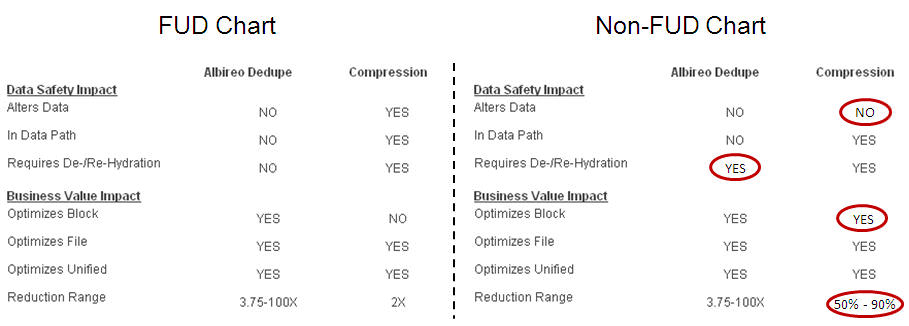

Tom also provided a chart that had a few inaccuracies in it, so I took the liberty of fixing some of them as well (see Figure 2).

I also want to call Tom out regarding his comment “I am gratified by the response to Albireo by … the press by recent OEM adoption. Albireo is becoming a standard in data optimization…”

Here the term delusional comes to mind, only because the links Tom calls out focus on Permabit itself, not on OEMs discussing their adoption of deduplication. Additionally, when Permabit announced Albireo, no OEMs were mentioned or quoted as having adopted or even tested the solution. This raises the question of how a solution announced only a week ago is already becoming a 'standard'.

When it comes to providing technical information in any type of written communication, it is important to make sure the data is accurate if you want to be credible. Often this requires doing research to ensure that the answers you are providing are correct. It is clear that the competitive team or technical marketing team at Permabit has let Tom down here by not providing him with correct information about how compression works in real life (at least from a Storwize perspective).

This brings me to the tail end of the title of this post. Perhaps a CEO should focus on running the company, driving the 'OEM' deals he speaks of, and doing business development, rather than trying to do any type of marketing or technical marketing. Our CEO says that he is not a blogger, and that some companies have natural bloggers while others do not. I guess this is because he is too busy running the only company that delivers real-time data compression without any performance degradation, and helping us manage hundreds of customers as well as a number of OEM opportunities.