Or should I say, ‘Setting the Record Straight on Backing Up Optimized Data’? Carter discusses in this blog the myriad ways to perform backups on optimized data. (His blog actually reads more like a white paper explaining how backup needs to be configured to work with his product.) One of the ways Carter describes is backup via NDMP, which he says “… is the most complicated.” The funny thing is that this is the way 90% of enterprises back up their NAS data. The other scenarios are either not stated quite correctly or are again designed to lead users to believe their solution is ‘simple’ when it really adds complexity (however, I’ll let the backup community debate that – I have been in backup for 10+ years and I know this won’t go over well with them, nor do I want to waste too much blog space). Finally, the last scenario they discuss isn’t backup – it’s replication, but I’ll address that too.

Let’s address these one at a time. First, Carter mentions that in some scenarios there is a need to rehydrate data in order to back it up. Rehydrating data may not require the array to have the physical capacity to store the data before it is backed up, but the array will need the CPU resources, I/O resources, bandwidth and time to rehydrate the data in order to back it up. Carter goes on to say that this situation is “ugly, but not that ugly”. I will tell you that any time you put more resource requirements on systems that do backups, you’re running the risk that backups won’t get done. One of the greatest challenges in IT is backup. Backup administrators are running into backup window problems all the time. Data is growing, not shrinking; having to do more work on more data in order to protect it is a recipe for failure. In my previous comments I may have incorrectly stated that you need more disk space to do the backups, but I did correctly state that the array will require more system resources. And where do these resources come from? When the system is idle?
When is your storage array idle?

Now, what if all you had to do was – well, nothing? Storwize sits in front of primary storage and stores your data, compressed, in real time, with no performance impact and while preserving the envelope of the data file. Then, when it comes time to back up, the backup administrator does absolutely nothing different than he/she did yesterday. The same shares are backed up, the same clients, and all the work is done by the Storwize appliance; there is no load on the filer. The next question is whether Storwize can keep up with the backup stream, and the answer is YES. As you saw in the Wikibon CORE blog, our time to compress is on the order of milliseconds – the time to decompress is even less. (I should also mention one thing Carter failed to mention: in order for backups to come off their system ‘transparently’ you need a software agent on the client – who wants to manage more clients?)

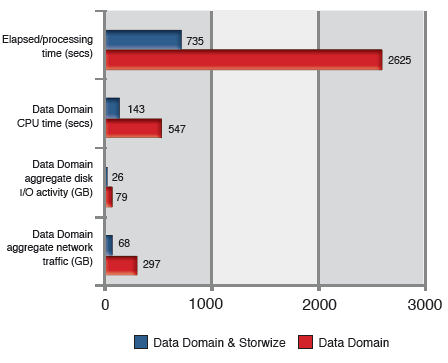

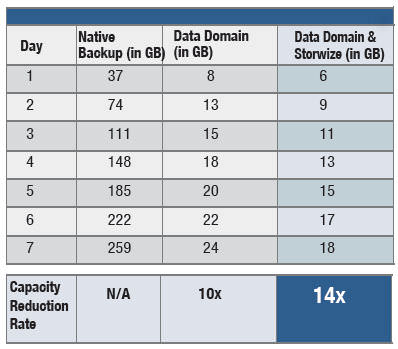

Carter also states that, “It should be noted, though, that Data Domain results will be slightly worse with either Storewize or Ocarina compression – because compressing data makes it harder to find duplicates.” Now, I hate to argue theory, and I have been speaking about the advantages of random access compression, specifically with deduplication, so I guess now I will show the results we have from live testing. The fact of the matter is, Storwize makes deduplication better. Because Storwize stores data in a random access manner inside the compressed file, just like a regular file, the deduplication ratios are preserved and are identical to those of non-compressed files. But don’t take my word for it: the chart on the left shows we made Data Domain faster and more efficient by pushing less raw data to the device. The chart on the right shows that we made Data Domain more efficient in its optimization (good for the customer). I would like to see the same charts from other vendors.

Two other notes to mention. First, if you consider snapshots a method of backup, then you need to pay special attention to your snapshot process with post-process optimization solutions. When using a post-process optimization solution, users MUST wait for the optimization process to complete before creating a snapshot, or they will snap an un-optimized file and save no space. Additionally, when you back up a snapshot, you need to restore the entire snapshot in order to restore a single file. Because the Storwize solution is in-band, users can take snapshots during the compression process and all files will be optimized, because Storwize writes all files in the compressed format.

The second thing to consider is backing up deduplicated data.
In a NAS environment, if the primary storage array is doing the actual deduplication, all data has to be backed up in its full un-optimized form using NDMP (even if it will be deduplicated on the secondary device: if the devices don’t use the same deduplication algorithms, the data must be rehydrated). This increases I/O and load on the filer substantially. The same situation occurs with an external solution; the rehydration may take place in a different location, but it still impacts overall backup performance. Additionally, it is important to remember that a deduplication process creates dependencies between files/blocks/objects which the storage is not aware of. Backing up this data through NDMP forces the external deduplication solution to be involved in the backup process, which significantly complicates backup administration and, more importantly, adds risk and too many elements to your backup and restore processes.

So, the bottom line: if the storage array is deduplicating the data, you back up the unoptimized/undeduplicated data, which can still be deduplicated on the backup target, but you are not getting any benefit in terms of shorter backups. If an external solution performs the deduplication, then that solution is added into the mix of storage, external dedupe, backup software and backup target – this just sounds like a backup bouillabaisse that wouldn’t sit well with any backup administrator.

Finally, perhaps the most common backup method for enterprise NAS systems is NDMP. Ocarina will talk about a ‘dedupe aware’ NDMP – is this some special NDMP that they wrote? Does it work for all files? Do you use it only for the Ocarina data and then the standard NDMP for non-Ocarina data? This again is just adding complexity. The value of Storwize is transparency. Install Storwize and EVERYTHING from your applications to your backup works EXACTLY as it did before – just faster – with nothing else to install. That seems pretty ‘effective’ and very simple to me.
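For readers who want to poke at the compression-versus-deduplication argument from earlier themselves, here is a toy Python sketch. To be clear, this is not Storwize’s, Ocarina’s or Data Domain’s actual algorithm; the 4 KB block size, the synthetic data and every function name are assumptions made up for illustration. It only shows one general property: when each block is compressed independently and deterministically, identical blocks stay identical after compression, whereas compressing the whole stream as one unit tends to blur the duplicates that a fixed-block deduplicator looks for.

```python
import hashlib
import random
import zlib

BLOCK = 4096  # hypothetical block size, chosen only for this illustration


def split(data, size=BLOCK):
    """Cut a byte string into fixed-size chunks, like a naive fixed-block deduper."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def dedupe_ratio(chunks):
    """Total chunks divided by distinct chunks (higher means more duplicates found)."""
    distinct = {hashlib.sha256(c).digest() for c in chunks}
    return len(chunks) / len(distinct)


def make_blocks(n_unique=8, n_total=64, seed=0):
    """Build synthetic, compressible blocks in which each unique block repeats."""
    rng = random.Random(seed)
    alphabet = b"abcdefgh "
    unique = [bytes(rng.choice(alphabet) for _ in range(BLOCK))
              for _ in range(n_unique)]
    return [unique[i % n_unique] for i in range(n_total)]


def demo():
    blocks = make_blocks()
    stream = b"".join(blocks)

    # 1. Dedupe the raw, uncompressed stream.
    raw = dedupe_ratio(split(stream))

    # 2. Compress every block on its own, then dedupe the compressed blocks.
    #    zlib is deterministic, so equal inputs give equal outputs.
    per_block = dedupe_ratio([zlib.compress(b) for b in blocks])

    # 3. Compress the whole stream at once, then dedupe fixed-size chunks of it.
    whole = dedupe_ratio(split(zlib.compress(stream)))

    return {"raw": raw, "per_block": per_block, "whole_stream": whole}


if __name__ == "__main__":
    for name, ratio in demo().items():
        print(f"{name}: {ratio:.2f}")
```

In this sketch the raw and per-block cases find exactly the same duplicates, which is the behavior I am describing for random access compression. The whole-stream number depends on the data and the compressor, so run it on your own sample data rather than taking any single figure as representative.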
Look, at the end of the day technology solutions are as much about solving the business issue as they are about the end user. Great technology that solves a problem but has an impact on end users or on IT process isn’t a solution at all. I have spent 10+ years of my career in customer support, 2+ years running professional services, 5+ years evangelizing products with customer input and feedback, and 4+ years as an analyst writing about technology for end users. I can tell you with 100% certainty that every customer environment is different, which is why many solutions exist. I will also tell you that I choose to work for companies that have solutions that are next generation, evolutionary and add real value to the end user. Steve said it best when he talked about the Virtual System Administrator. Their job is hard, backup is hard; let’s not make it any harder. I fully admit I don’t know everyone’s solutions as well as I know the ones I represent, but I chose the ones I represent for a reason and after a good deal of due diligence. Storwize not only adds technical value and saves customers a lot of money, it also fits seamlessly and transparently into your storage environment with no impact on performance, end users or process. That is real value!