Each year there tends to be one technology that stands out in the storage space. In 2009 it was data deduplication. At the end of 2008, EMC acquired Avamar, a source-based deduplication solution. Later, in 2009, EMC announced a strategic partnership with Quantum for target-based data deduplication. Also in 2009, EMC entered a bidding war with NetApp for Data Domain and won. NetApp, for its part, made data deduplication announcements around its A-SIS technology. Quantum, FalconStor, and Symantec all had their own data deduplication stories, and a host of private companies such as Permabit, Sepaton, and ExaGrid were all talking up the merits of data deduplication.

As the story goes, if you haven't put data deduplication into your backup environment yet, either your environment contains not one iota of duplicate data (highly unlikely), or your company has gobs of money and no problem with:

- Backing up to slow tape

- Slow recovery from tape

- Keeping massive amounts of data on unreliable tape

- Backing up full streams of data to disk (and wasting valuable storage space)

What I am saying is that if you haven't implemented a data deduplication solution by now, you have been left in the technology dust. Data deduplication just makes too much sense. We have all heard the expression "No one ever got fired for buying X." But has anyone ever gotten promoted because they bought X? I have to believe that the IT team that saves its company 50% or more on storage will get promoted. Storage is a cost drain on IT; it's the applications that make a company money. It's time to start focusing some of those valuable IT dollars on the applications that make your company money. It's time to be the IT Super Hero!
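To make the idea concrete, here is a minimal sketch of fixed-block deduplication in Python. It is an illustration under simplifying assumptions, not a description of how Avamar, Data Domain, or any other product mentioned above works; many commercial systems use variable-length segmentation and far more sophisticated indexing. The helper names `dedupe` and `restore` and the 4 KiB block size are hypothetical. The demo simulates two nightly full backups of the same 1 MiB data set in which only one block changed, which is exactly the kind of duplicate data a full-stream disk backup wastes space on. (`random.Random.randbytes` requires Python 3.9 or later.)

```python
import hashlib
import random

BLOCK_SIZE = 4096  # hypothetical fixed block size (4 KiB)


def dedupe(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into fixed-size blocks and keep one copy of each unique block.

    Returns (store, recipe): `store` maps a SHA-256 digest to the block bytes,
    and `recipe` is the ordered list of digests needed to rebuild `data`.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # only the first copy is stored
        recipe.append(digest)
    return store, recipe


def restore(store, recipe) -> bytes:
    """Rebuild the original byte stream from the block store and recipe."""
    return b"".join(store[digest] for digest in recipe)


if __name__ == "__main__":
    # Night 1: a full backup of 1 MiB of pseudo-random data (fixed seed).
    night1 = random.Random(42).randbytes(1 << 20)
    # Night 2: another full backup in which only the first block changed.
    night2 = b"\x00" * BLOCK_SIZE + night1[BLOCK_SIZE:]
    stream = night1 + night2

    store, recipe = dedupe(stream)
    stored = sum(len(block) for block in store.values())
    assert restore(store, recipe) == stream  # nothing is lost
    print(f"logical: {len(stream)} bytes, stored: {stored} bytes, "
          f"saved: {1 - stored / len(stream):.1%}")
```

In this toy run the second full backup adds only one new block to the store, so the two logical copies occupy roughly the space of one.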

Real-time, Random Access Compression in 2010

In 2010 the main topic seems to be primary storage optimization. In just the last 10 days, a number of industry articles have discussed it. The reality is that if you are going to make an impact on storage growth, you need to attack the problem where it starts: with primary storage. There are several reasons for this. First, in a webinar put out by Storwize and IBM, John Powers from IBM states that "the industry is out of tricks" when it comes to keeping disk drives on the areal density curve that gives users 2x the capacity in the same space. New technologies have to be used to maintain this trend (at least for the foreseeable future), and this is where compression, or capacity optimization, will play a key role. Additionally, optimization technologies can play a key role in making SSDs more cost-competitive.

It is important to note, as most of the articles written in the last few days point out, that primary storage optimization can have no impact on performance. As W. Curtis Preston puts it in his article "Dedupe and Compression Cuts Storage Down to Size," "The No. 1 rule when introducing a change in your primary data storage system is primum non nocere, or “First, do no harm.”" This means vendors who provide optimization solutions cannot compromise the fundamental reasons why end users buy storage. Jeff Byrne and Jeff Boles of the Taneja Group recently published an article in InfoStor, "Consider Compression for Primary Storage Optimization," that also states:

..."We believe that a data reduction technology must meet the following criteria to be considered PSO-capable in the enterprise:

• Reliably and consistently reduce primary storage capacity requirements by 50% or more (depending on the data type)

• Do not degrade performance of primary storage in terms of I/O or latency, even for data streams that are fully sequential or completely random I/O

• Completely preserve the original data set

• Provide full transparency (requires no changes to existing IT infrastructure or processes)"...
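As a rough illustration of why random access matters for criteria like these, here is a minimal Python sketch of lossless compression done in independently compressed chunks, so that a random read decompresses only the chunks it touches rather than the whole object. This is a generic technique shown with the standard `zlib` module; it is an assumption for illustration, not a description of how Storwize or any other vendor implements real-time compression. The class name `ChunkedCompressedBlob` and the 64 KiB chunk size are hypothetical.

```python
import zlib

CHUNK_SIZE = 64 * 1024  # hypothetical chunk size (64 KiB)


class ChunkedCompressedBlob:
    """Stores data as independently zlib-compressed chunks for random reads."""

    def __init__(self, data: bytes, chunk_size: int = CHUNK_SIZE):
        self.chunk_size = chunk_size
        self.length = len(data)
        # Each chunk is compressed on its own, so it can be decompressed alone.
        self.chunks = [
            zlib.compress(data[i:i + chunk_size])
            for i in range(0, len(data), chunk_size)
        ]

    def read(self, offset: int, size: int) -> bytes:
        """Return data[offset:offset + size], decompressing only the chunks it spans."""
        if offset < 0 or size < 0:
            raise ValueError("offset and size must be non-negative")
        end = min(offset + size, self.length)
        if offset >= end:
            return b""
        first = offset // self.chunk_size
        last = (end - 1) // self.chunk_size
        window = b"".join(
            zlib.decompress(self.chunks[i]) for i in range(first, last + 1)
        )
        start = offset - first * self.chunk_size
        return window[start:start + (end - offset)]

    def stored_bytes(self) -> int:
        """Physical bytes used by the compressed chunks."""
        return sum(len(chunk) for chunk in self.chunks)


if __name__ == "__main__":
    original = b"primary storage optimization " * 50000
    blob = ChunkedCompressedBlob(original)
    assert blob.read(100_000, 4096) == original[100_000:104_096]  # lossless
    print(f"logical: {len(original)} bytes, stored: {blob.stored_bytes()} bytes")
```

The chunk size is the main design trade-off in this sketch: smaller chunks make random reads cheaper but give the compressor less context, which usually lowers the reduction ratio.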

Providing users with a solution that can reduce storage capacity by 50% or more has a significant impact on a company's overall storage costs, but it cannot negatively affect existing performance and availability requirements or require changes to any process. Doing so could negate the value of an optimization solution.
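A 50% capacity reduction corresponds to a 2:1 reduction ratio. A tiny Python sketch of the arithmetic (the function names are hypothetical) makes the relationship explicit: the fraction of capacity saved is 1 - 1/ratio, so each further increase in ratio saves less than the one before.

```python
def savings_fraction(ratio: float) -> float:
    """Fraction of physical capacity saved at a reduction ratio (2.0 means 2:1)."""
    if ratio < 1:
        raise ValueError("reduction ratio must be at least 1")
    return 1 - 1 / ratio


def physical_capacity(logical_tb: float, ratio: float) -> float:
    """Physical capacity (TB) needed to hold `logical_tb` of data at `ratio`."""
    if ratio < 1:
        raise ValueError("reduction ratio must be at least 1")
    return logical_tb / ratio


if __name__ == "__main__":
    for ratio in (2, 3, 5, 10, 20):
        print(f"{ratio}:1 -> {savings_fraction(ratio):.0%} saved, "
              f"{physical_capacity(100, ratio):.1f} TB needed for 100 TB of data")
```

For example, 2:1 saves 50%, 10:1 saves 90%, and 20:1 saves 95%, so going from 10:1 to 20:1 buys only five more percentage points of savings.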

Where to Optimize?

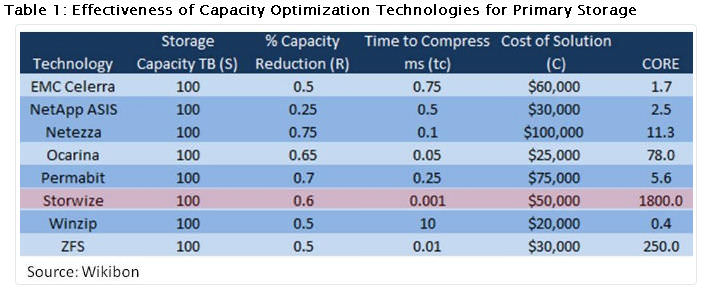

Ocarina Networks recently published a blog post stating that it does 'in-line' optimization for primary storage. (The post did, however, focus on the fact that Ocarina prefers to operate in post-process mode. This probably has much to do with the impact the solution would have on the criteria outlined in the Taneja article.) As we look at which technologies can fit the bill for 'real-time' primary storage optimization, we turn to Dave Vellante's piece "Dedupe Rates Matter... Just Not as Much as You Think." Here Vellante takes a scientific approach to showing how optimization adds real value for the end user. Dave's CORE (Capacity Optimization Ratio Effectiveness) metric ties together:

- Optimization ratio

- Horsepower required to achieve this ratio

- Cost

Dave states that if a solution does not achieve a CORE above 1000, there is no point trying to use it in real time. This does not mean that real-time optimization can't be done with the solutions he lists, but doing so would require throwing so much more horsepower at them that their cost would be driven too high.

And finally, Tom Trainer from Network Computing wrote a nice piece, "Storwize Focuses on Optimization Without Compromise." Now, just to be clear, I am a Storwize employee, and Tom's piece was very positive on Storwize. Interestingly, though, the piece actually talks about customers who have used Storwize technology to solve real storage challenges without negatively impacting their storage performance or processes. (There is also a Wikibon article that points to a customer, Shopzilla, that uses Storwize to save storage capacity without any performance degradation.)

At the end of the day, my point is that primary storage optimization is quickly becoming storage's hottest technology of 2010. A number of articles on the topic all say the same thing: if you're going to deploy primary storage optimization, make sure you preserve all of the characteristics of that primary storage, including performance, availability, and transparency to all applications (including storage functions such as snapshots), as well as all of the downstream IT processes for that storage. Vendors without real solutions are worried that startups with great solutions will eat into their disk sales. They are being very shortsighted. Primary storage optimization is too important to the customer; it adds too much value. Additionally, having been in the storage business for 20 years, I can tell you that disk is elastic: any time you provide solutions that let customers save storage space, end users find reasons to fill it up. Don't be left behind on this trend. The technology is available for IT to start saving a great deal of money on storage capacity, floor space, power, and cooling, and to focus IT budgets on solutions that make the company money. Become that IT Super Hero!