How many of you have heard that compression and deduplication just don’t belong together? Like oil and water. I know this from experience: when I worked for EMC, the Avamar and Data Domain sales reps would tell customers who had encrypted or compressed primary data that the best thing to do was to uncompress it, so they could get the backup savings that deduplication promises.

This is wrong on a number of levels. First, telling a customer not to compress primary storage data just to get downstream benefits is counterintuitive. Second, if the customer has already changed their processes to accommodate compressed primary data, the deduplication backup vendor is asking them to change those processes yet again. Third, it costs the customer more money in primary storage. Fourth, it undermines the decision the customer made to compress the data in the first place. If you really want to insult your customer, tell them the decision they made to save money was a bad one. Finally, all data deduplication technologies utilize LZ compression on their data ‘chunks’ to further reduce their data size, and then use this added compression benefit to talk about their deduplication ratios.

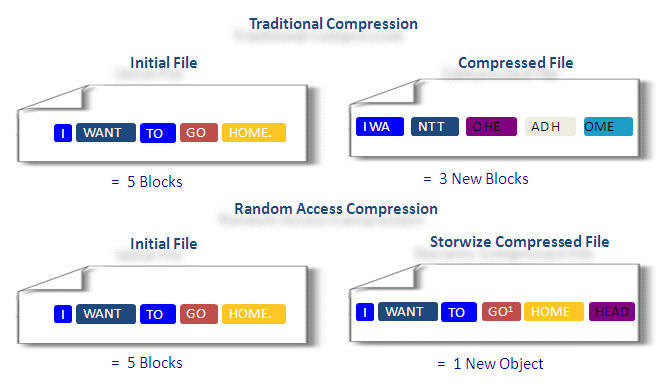

The reality is that, with traditional compression implementations, the effects of deduplication are not significantly realized. The reason is how traditional compression writes the files it compresses. If a file is changed, then from the point of the change through the rest of the file, the new compressed file is essentially a new file. When deduplication (even variable block deduplication) looks at this file and finds the first changed blocks, the rest of the file will also be different, and the deduplication ratios will be significantly reduced. Essentially, this turns highly effective ‘variable block’ deduplication into ‘fixed block’ deduplication, and research shows that fixed block deduplication is 3 to 5 times less efficient than variable block deduplication. Now that you’ve spent all that money on an expensive variable block solution, are you really getting the benefits?
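If you want to see this for yourself, here is a minimal sketch in Python using the standard `zlib` module as a stand-in for a traditional stream compressor. The sample data, the helper names (`make_data`, `common_prefix_len`) and the edit position are my own assumptions for illustration, not taken from any vendor's product. It flips a single byte near the start of a file, compresses both versions, and reports how early the two compressed outputs stop matching.

```python
import random
import zlib


def make_data(size: int, seed: int = 0) -> bytes:
    """Build repeatable, compressible sample data (hypothetical test input)."""
    rng = random.Random(seed)
    words = [b"backup", b"storage", b"block", b"dedupe",
             b"compress", b"primary", b"tier", b"data"]
    out = bytearray()
    while len(out) < size:
        out += rng.choice(words) + b" "
    return bytes(out[:size])


def common_prefix_len(a: bytes, b: bytes) -> int:
    """Return how many leading bytes the two inputs share."""
    n = min(len(a), len(b))
    for i in range(n):
        if a[i] != b[i]:
            return i
    return n


original = make_data(200_000)

# Change one byte near the start of the uncompressed file.
edited_buf = bytearray(original)
edited_buf[1000] ^= 0xFF
edited = bytes(edited_buf)

# Compress each version as a single stream, the "traditional" way.
c1 = zlib.compress(original)
c2 = zlib.compress(edited)

prefix = common_prefix_len(c1, c2)
print(f"compressed size: {len(c1)} bytes")
print(f"outputs match for only the first {prefix} bytes")
```

The exact numbers depend on the data and the compressor, but the point is that the two compressed streams stop matching very early, so a deduplication engine looking at the second file finds little it has already stored.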

So what is the answer? The answer is to leverage a compression implementation for primary storage that utilizes ‘random access’ compression. Random access compression differs from traditional implementations because it can update only small segments inside a compressed file, rather than changing the file from the point of the modification onward.
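To make the idea concrete, here is a rough sketch of the general principle, again in Python with `zlib`. This is not the Storwize implementation; it simply compresses each fixed-size segment of a file independently, which is one simple way to get random access behavior. The segment size, the sample data and the function names are assumptions I picked for illustration.

```python
import zlib
from typing import List

SEGMENT_SIZE = 32 * 1024  # hypothetical segment size, chosen for illustration


def compress_segments(data: bytes, segment_size: int = SEGMENT_SIZE) -> List[bytes]:
    """Compress each fixed-size segment on its own, with no shared state."""
    return [
        zlib.compress(data[i:i + segment_size])
        for i in range(0, len(data), segment_size)
    ]


def decompress_segments(segments: List[bytes]) -> bytes:
    """Rebuild the original file from independently compressed segments."""
    return b"".join(zlib.decompress(s) for s in segments)


# Repeatable sample data: 10,000 records of 30 bytes each.
original = b"".join(b"primary storage record %06d\n" % i for i in range(10_000))

# Change one byte near the start of the uncompressed file.
edited_buf = bytearray(original)
edited_buf[1000] ^= 0xFF
edited = bytes(edited_buf)

before = compress_segments(original)
after = compress_segments(edited)

# Only segments whose uncompressed content changed can differ.
changed = [i for i, (a, b) in enumerate(zip(before, after)) if a != b]
print(f"{len(changed)} of {len(before)} compressed segments changed: {changed}")
```

Because every segment is compressed on its own, the edit touches only the segment that contains it. Everything else is byte-for-byte identical to what was there before, which is exactly what a deduplicating backup wants to see.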

One of the main benefits of compressing primary data with a random access implementation is that it has very little effect on your downstream IT processes, including backup and even your next generation data deduplication backups. Because fewer blocks change in a file compressed with random access techniques, backup with variable block deduplication not only maintains its deduplication ratios but also backs up smaller blocks of data, increasing backup performance.

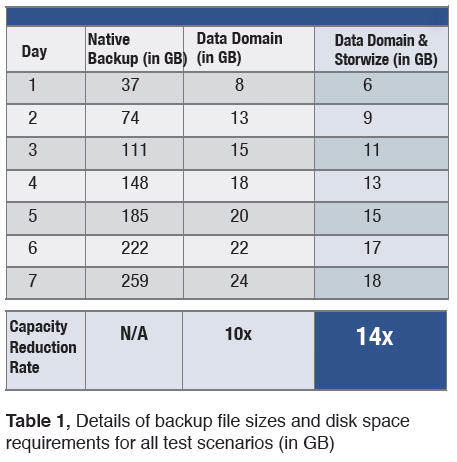

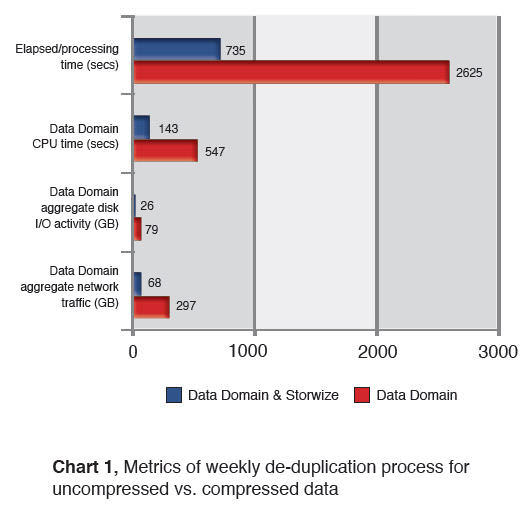

Storwize utilizes random access compression. Storwize has tested its compression with Data Domain deduplication and showed that deduplication ratios were maintained and backup performance increased (see the full paper at www.storwize.com).

Storwize compresses data using its RACE™ architecture. Its real-time random access nature and transparency in the environment provide an overwhelming number of benefits, including:

- Maximum compression

- Increased storage performance (while they like to say ‘no performance degradation’ the reality is it boosts performance)

- No change to any downstream processes – including next generation backup with data deduplication

The message to IT is this: you don’t have to change your processes to maximize your storage savings (primary and backup). You may just need to utilize the right technology at the right storage tier to get the maximum benefits throughout your storage infrastructure. Remember, it’s not about compression itself but the implementation of compression that affords you the luxury of no change to any of your downstream processes, including deduplication. So the next time your deduplication vendor tells you to uncompress your primary storage before you back up, tell them you don’t need to because you use Storwize.