I find myself in a true quandary. First, I have genuine admiration for my good friend and fellow blogger 3Par Farley, and I never feel comfortable being on the opposite side of an argument from him. Second, I find myself agreeing, to a degree, with Jon Toigo (who still uses crazy permalinks and considers Novell a serious storage player. What is up with that?).

I’m sure by now most of you have read about the recent furor over Tom Georgens’ comments on the future of storage tiering. A number of folks who sell ‘tiering’ technology reacted with disdain (see a list on Storagerap). Some wondered how a storage visionary like Tom could turn his back on technology that helps people save money on storage. Some even suggested that this is just marketing to cover a deficiency in the NetApp product line. However, one applauded Tom for understanding how the real world deploys storage. All good points, but I have my own theory on storage tiering...

I want to come right out and say that I think storage tiering is an incredibly smart concept. (Now that that is off the table…) I would also say that, much like the prediction that tape is ‘dead’ (I guess Data Domain didn’t get that memo), calling storage tiering dead misses the point: it can’t be dead, because in reality it was never truly alive, and I don’t think it will be for a very long time. Let’s look at the facts:

First, HSM never really went anywhere; it never saw mass adoption. Second, tiering is not a technology issue. Humans are lazy. What do I mean? HSM, tiering, or whatever you want to call it depends on policy, and IT can’t get any two groups in a company to agree on anything other than that storage is too expensive. When I speak to well-respected people in IT in the ‘real world’ (my dad), they tell me it is too difficult to get organizations to agree on when data can be archived in order to save money (and that is what this is really all about). Finally, IT processes get in the way of a good tiering strategy. Getting data to go one way is easy: move it to cheaper and cheaper tiers of storage until it vanishes. Try getting it back. That takes a lot of management tools and integration, and it costs just as much as doing nothing.

Remember 8 or 10 years ago, when blogs didn’t exist and magazines did? On the back page of one of those storage trade rags, I recall that Steve Duplessie picked Tom as the ‘Smartest Storage-Guy of 200x.’ I can’t remember which year, and Google isn’t helping, but that isn’t the point. Tom is too smart to say something as frivolous as ‘tiering is dead’ by mistake. He is also not a marketing guy. So I’m betting that his point is this: let’s help users use storage the way they like to consume it, simply.

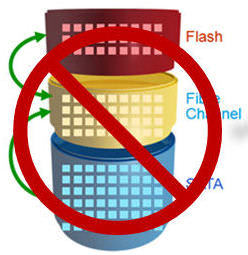

The most basic value proposition for tiering is to save money within the domain of the storage array. Tiering moves data to lower-cost disk technology according to a predefined policy. If your policy is based on when data was last accessed, the tiering software would keep your most active data on your highest tier of storage (perhaps SSD) and move your ‘stale’ data to SATA. For that luxury, you get:

a) To fight with every organization within the company to decide on a policy for when it is actually okay to move the data.

b) To spend money on a vendor’s tiering software, pay maintenance fees, and learn how to use new software.

c) To hope that the application doesn’t throw you a curve and suddenly need the SATA data quickly, because then you have to hurry and move it back to SSD, which is inefficient and error-prone (at least, historically it has been).
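To make the last-access policy described above a little more concrete, here is a minimal Python sketch of age-based tiering. It is not how FAST or any other vendor product works; the tier names, the `Extent` record, the idle-age thresholds, and the `target_tier`/`run_policy` helpers are all hypothetical. The thresholds stand in for the policy from item a), and the second run shows the scramble from item c) when cold data suddenly turns hot.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical tiers, ordered from fastest/most expensive to slowest/cheapest.
TIERS = ["ssd", "fc", "sata"]


@dataclass
class Extent:
    """A hypothetical chunk of data tracked by the tiering engine."""
    name: str
    tier: str
    last_access: datetime


def target_tier(extent, now, policy):
    """Return the tier an extent belongs on under an age-based policy.

    `policy` is a list of (max_idle_age, tier) pairs, assumed to be sorted by
    increasing age. Data idle longer than every threshold goes to the cheapest tier.
    """
    idle = now - extent.last_access
    for max_idle, tier in policy:
        if idle <= max_idle:
            return tier
    return TIERS[-1]


def run_policy(extents, now, policy):
    """Move each extent to its target tier; return the moves as (name, from, to)."""
    moves = []
    for e in extents:
        dest = target_tier(e, now, policy)
        if dest != e.tier:
            moves.append((e.name, e.tier, dest))
            e.tier = dest  # a real array would copy the data here
    return moves


# Hypothetical policy: idle <= 7 days stays on SSD, <= 90 days goes to FC, older goes to SATA.
now = datetime(2009, 6, 1)
policy = [(timedelta(days=7), "ssd"), (timedelta(days=90), "fc")]
extents = [
    Extent("orders_db", "ssd", now - timedelta(days=1)),
    Extent("q1_reports", "ssd", now - timedelta(days=30)),
    Extent("2007_archive", "fc", now - timedelta(days=400)),
]

first = run_policy(extents, now, policy)
print(first)  # [('q1_reports', 'ssd', 'fc'), ('2007_archive', 'fc', 'sata')]

# The application suddenly needs the archived data again.
extents[2].last_access = now
second = run_policy(extents, now, policy)
print(second)  # [('2007_archive', 'sata', 'ssd')]
```

Note that every threshold in `policy` is a decision someone has to agree on, and every move in the output is real data copied between tiers.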

So I think what Tom is challenging you to think about is this: are you spending your money on tiering software wisely? Vendors will tell you that it pays for itself, but does it really? Despite the efforts of every tiered solution to be truly autonomic, the reality is that none of them can replace a person’s decision-making process, and even if you could get all of your data to tier the way every organization wants, tiering would still disrupt your processes. Additionally, I haven’t heard of a vendor offering a heterogeneous tiering solution, and not many customers buy all their storage from one vendor (as much as EMC would like them to). So in the end, there isn’t one product that can do storage tiering across your whole environment; you would need several. If that is the case, then you need people to manage all of that software and all of those tiering policies. I thought this was supposed to save us money?

The hidden OPEX of figuring out how tiering works for each vendor in your environment will ultimately give you pause before you deploy. That may be too much complexity for the benefit you get.

Part of the reason this discussion reared its ugly head has more to do with marketing than anything else. EMC launched flash drives last year and told customers that “Capacity pricing is no longer about $/GB but $/GB/IO.” (Of course, if you can’t win by the rules of the game, change the rules.) The problem is that no matter what the rules are, budgets are finite. Selling customers on SSDs (higher-margin drives) meant that users who bought them could afford to put only the data requiring the highest performance there, so they would need to move everything else to a lower tier. EMC said, “Right, so we can also sell you FAST to help you with the tiering.” But as Mark points out, FAST is a 1.0 product that doesn’t work well yet, and besides, for all the reasons about human nature outlined above, it just isn’t going to take off (though I am sure EMC’s marketing group will ‘show’ otherwise).

On the other hand, HDDs and flash keep getting cheaper, so you might convince yourself that you are just fine riding that disk cost curve and working on other pressing matters rather than deploying new tiering software. If you pause, maybe everyone else will as well, which means the tiering being hyped today may never be widely deployed across the industry. Is it possible that this is what Tom was thinking?

To me, it boils down to something I’ve said many times: new technology is easy to introduce into the data center, but new process is not. Tiering runs the risk of disrupting process, which means buying behavior will be slow. Plus, there may be other ways to reduce cost besides tiering.

For example, as Duplessie notes in his blog post Random Thoughts for a Friday, “primary storage data reduction is going to be an in vogue conversation by the end of this year.” So if real-time, random-access compression of primary storage can give IT what it wants (significantly cheaper storage, no performance impact, continued high availability, and independence from any particular storage vendor, i.e. heterogeneous support), why wouldn’t IT do that instead of trying to figure out storage tiering? Primary storage compression pushes the need for storage tiering out five years, and who knows, by then tiering may actually work and be automated.
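The cost side of this argument is simple arithmetic. As a rough sketch, using made-up placeholder prices (not real quotes from any vendor): compression divides the effective price of every GB by the compression ratio, with no placement policy involved, while tiering lowers the blended price only for the fraction of data that a policy actually manages to move. The function names and numbers below are hypothetical.

```python
def compressed_cost_per_gb(raw_cost_per_gb, ratio):
    """Effective $/GB of logical data when it is stored compressed at `ratio`:1."""
    if ratio <= 0:
        raise ValueError("compression ratio must be positive")
    return raw_cost_per_gb / ratio


def tiered_cost_per_gb(placement):
    """Blended $/GB for a list of (fraction_of_data, cost_per_gb) pairs.

    The fractions describe where the tiering policy has placed the data
    and must add up to 1.
    """
    if abs(sum(f for f, _ in placement) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return sum(f * c for f, c in placement)


# Hypothetical placeholder prices in $/GB, for illustration only.
PRIMARY = 10.0
CHEAP = 3.0

# Compression applies to all data, regardless of policy.
compressed = compressed_cost_per_gb(PRIMARY, 2.0)
# Tiering savings depend on how much data the policy actually moves.
tiered_most_moved = tiered_cost_per_gb([(0.2, PRIMARY), (0.8, CHEAP)])
tiered_little_moved = tiered_cost_per_gb([(0.8, PRIMARY), (0.2, CHEAP)])

print(round(compressed, 2))           # 5.0
print(round(tiered_most_moved, 2))    # 4.4
print(round(tiered_little_moved, 2))  # 8.6
```

The two levers are not mutually exclusive; the sketch only shows where each one’s savings come from.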

Now can't we all just get along and have No More Tiers?